Hello 😉 Today, we are presenting and publishing an element of the playground’s. It is a classification model whose task is to determine the polarity of texts. We are publishing the model on our huggingface 😉 We define polarities as:

- positive — the model assigns this class if the information in the texts can evoke positive emotions/feelings;

- negative — if the information in the texts evokes negative feelings;

- ambivalent/neutral — if the information is neutral or evokes conflicting feelings at the same time;

Process: data and learning

The model was developed in two stages. However, unlike the standard approach (starting with annotation), we began by training the model based on existing annotations (hm… that’s nothing new… yes and no…). However, when looking for a dataset for polarization, most often these are datasets related to opinions on a given topic. In our case, it is not about determining the polarization of opinions (e.g., This product can be thrown in the trash! or I recommend this doctor because he has a very good approach to patients!), but about determining the polarization of information that the media (websites) feed us.

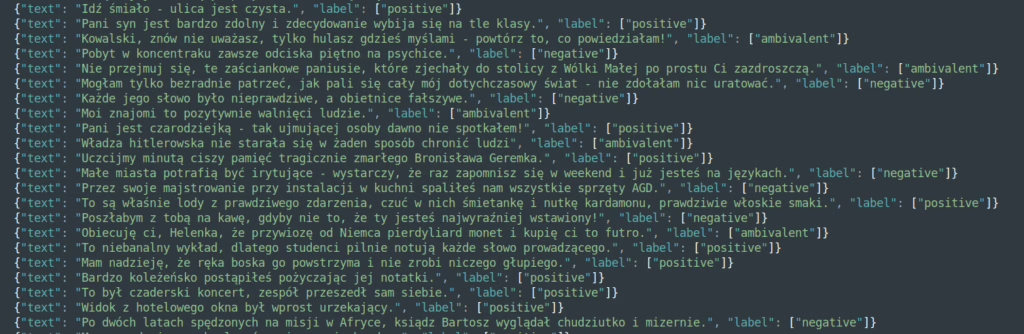

So we had to look elsewhere 😉 The choice was not so obvious, but it reflected the idea of polarization of information rather than opinion… and we settled on PlWordNet Emo. PlWordNet Emo is a selected part of PlWordNet’s marked with emotions (and more) (the markings are at the level of word meanings) — I recommend familiarizing yourself with what EmoPlWordNet is — it is a very valuable source of information. After a few technical steps, we transformed the examples of usage into meanings with emotional descriptions, and then into an approximate set of emotional polarization (reduction of emotions and granulation to 3 – positive, negative, ambivalent). Below are a few examples from the set, after conversion:

We used this collection to train the polarity3c-zero model, which was immediately used in the decision support process during annotation. The model provided real-time annotation suggestions for two annotators. This resulted in a collection of approximately 3,500 manual annotations, which were used for the final training of the model.

The final model is a simple architecture in which a simple classification layer (ClassificationHead) is added above the language model, which is trained to determine polarity. Classification layer architecture:

(classifier): RobertaClassificationHead(

(dense): Linear(in_features=1024, out_features=1024, bias=True)

(dropout): Dropout(p=0.1, inplace=False)

(out_proj): Linear(in_features=1024, out_features=3, bias=True)

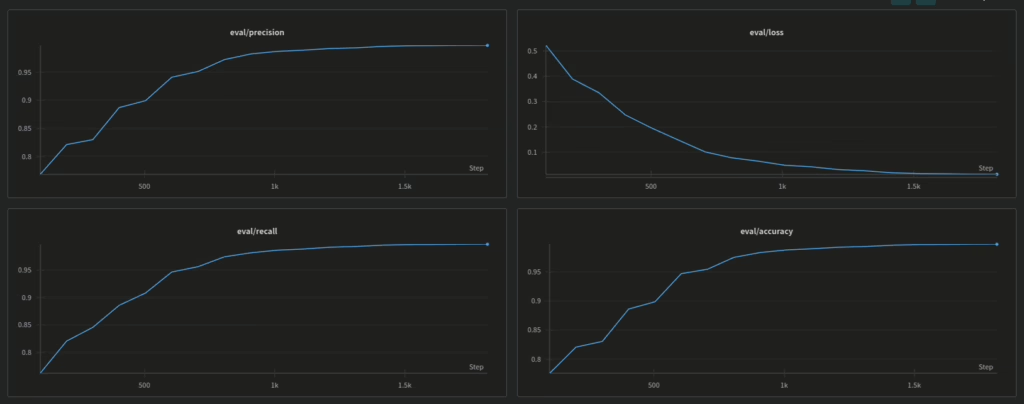

)1024 on the first dense layer of Linear is the output of the base model polish-roberta-large-v2, the output of the polarity3c model, is the layer marked (out_proj), which has 3 features at the output, i.e., our detected classes. The charts below show the basic metrics from training this model:

Launch and testing

The easiest way to run the model is to use the transformers library with a pipeline’a.

from transformers import pipeline

classifier = pipeline(model="radlab/polarity-3c", task="text-classification")The use of the model is equally simple:

classifier("Po upadku reżimu Asada w Syrii, mieszkańcy, borykający się z ubóstwem, zaczęli tłumnie poszukiwać skarbów, zachęceni legendami o zakopanych bogactwach i dostępnością wykrywaczy metali, które stały się popularnym towarem. Mimo, że działalność ta jest nielegalna, rząd przymyka oko, a sprzedawcy oferują urządzenia nawet dla dzieci. Poszukiwacze skupiają się na obszarach historycznych, wierząc w legendy o skarbach ukrytych przez starożytne cywilizacje i wojska osmańskie, choć eksperci ostrzegają przed fałszywymi monetami i kradzieżą artefaktów z muzeów.")In response, we will receive the value:

[{'label': 'ambivalent', 'score': 0.9994786381721497}]To read the full confidence distribution of the model, simply add the option specifying how many labels with the highest probability you want to receive. In our case, we have 3 labels, so we add the option top_k=3 to theclassifier to receive information about all classes:

classifier("Po upadku reżimu Asada w Syrii, mieszkańcy, borykający się z ubóstwem, zaczęli tłumnie poszukiwać skarbów, zachęceni legendami o zakopanych bogactwach i dostępnością wykrywaczy metali, które stały się popularnym towarem. Mimo, że działalność ta jest nielegalna, rząd przymyka oko, a sprzedawcy oferują urządzenia nawet dla dzieci. Poszukiwacze skupiają się na obszarach historycznych, wierząc w legendy o skarbach ukrytych przez starożytne cywilizacje i wojska osmańskie, choć eksperci ostrzegają przed fałszywymi monetami i kradzieżą artefaktów z muzeów.", top_k=3)And here’s the way out:

[{'label': 'ambivalent', 'score': 0.9994786381721497},

{'label': 'negative', 'score': 0.0002675618161447346},

{'label': 'positive', 'score': 0.0002538080152589828}]

Outro

The model has been available on our playground’s since July last year. We collect data on its performance, which can be viewed in the statistics. Information about polarization is also added to each new stream using this model. The model is, of course, available for free on our HF: here is the model.